How to implement seq2seq attention mask conveniently? · Issue #9366 · huggingface/transformers · GitHub

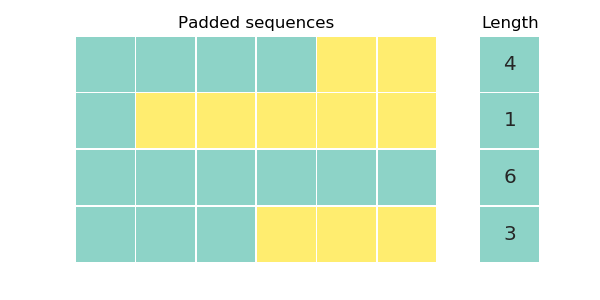

Illustration of the three types of attention masks for a hypothetical... | Download Scientific Diagram

Attention Wear Mask, Your Safety and The Safety of Others Please Wear A Mask Before Entering, Sign Plastic, Mask Required Sign, No Mask, No Entry, Blue, 10" x 7": Amazon.com: Industrial &

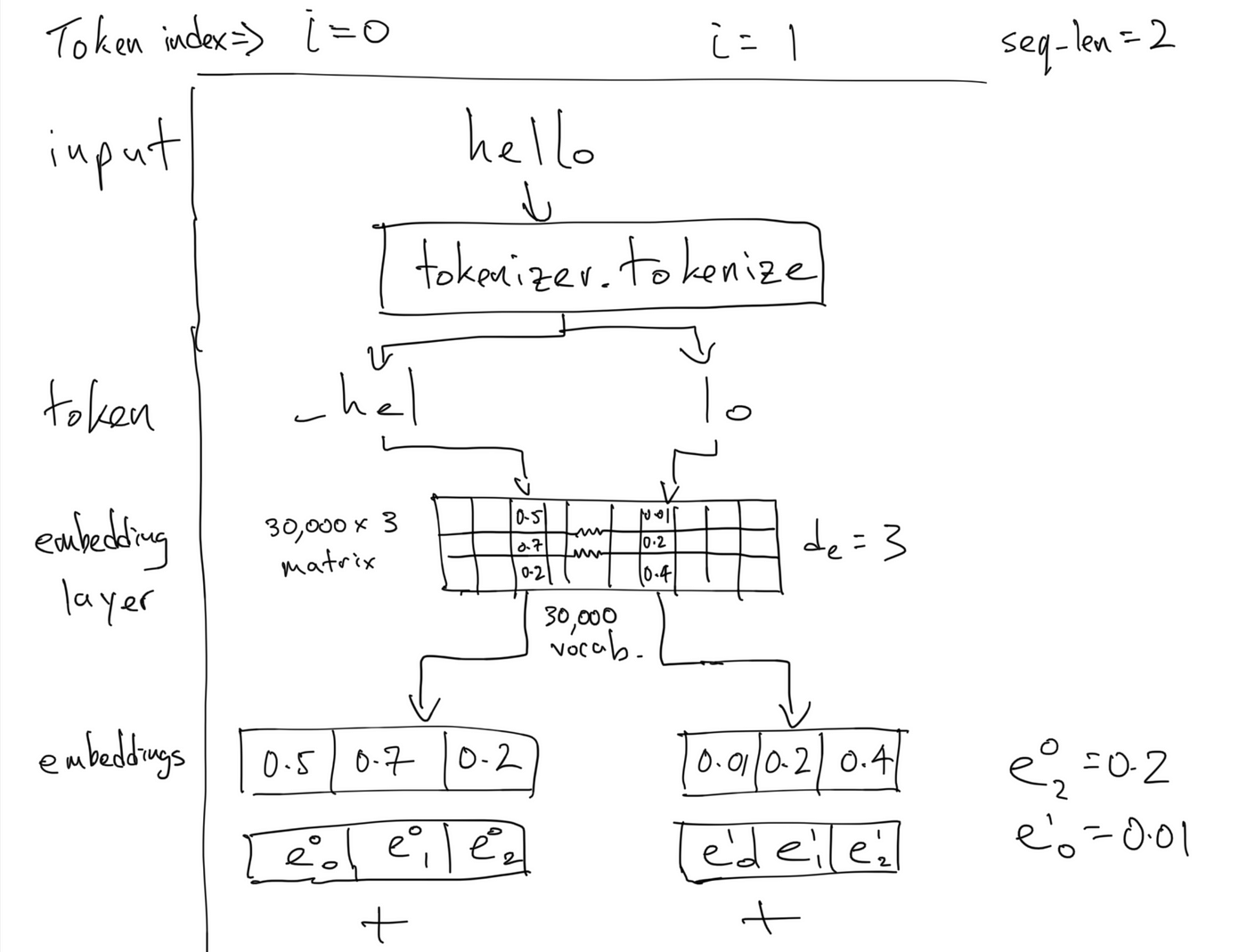

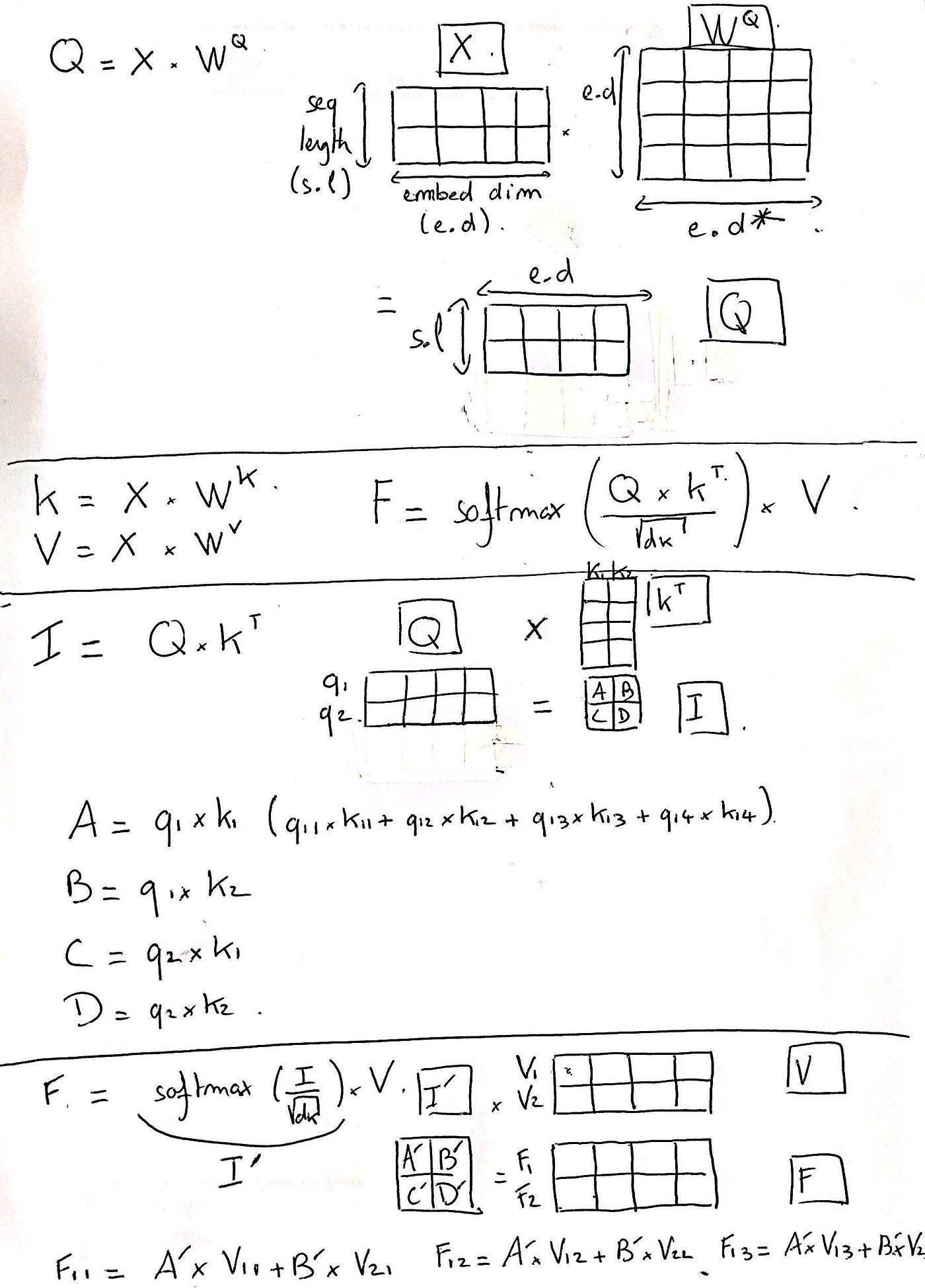

Positional encoding, residual connections, padding masks: covering the rest of Transformer components - Data Science Blog

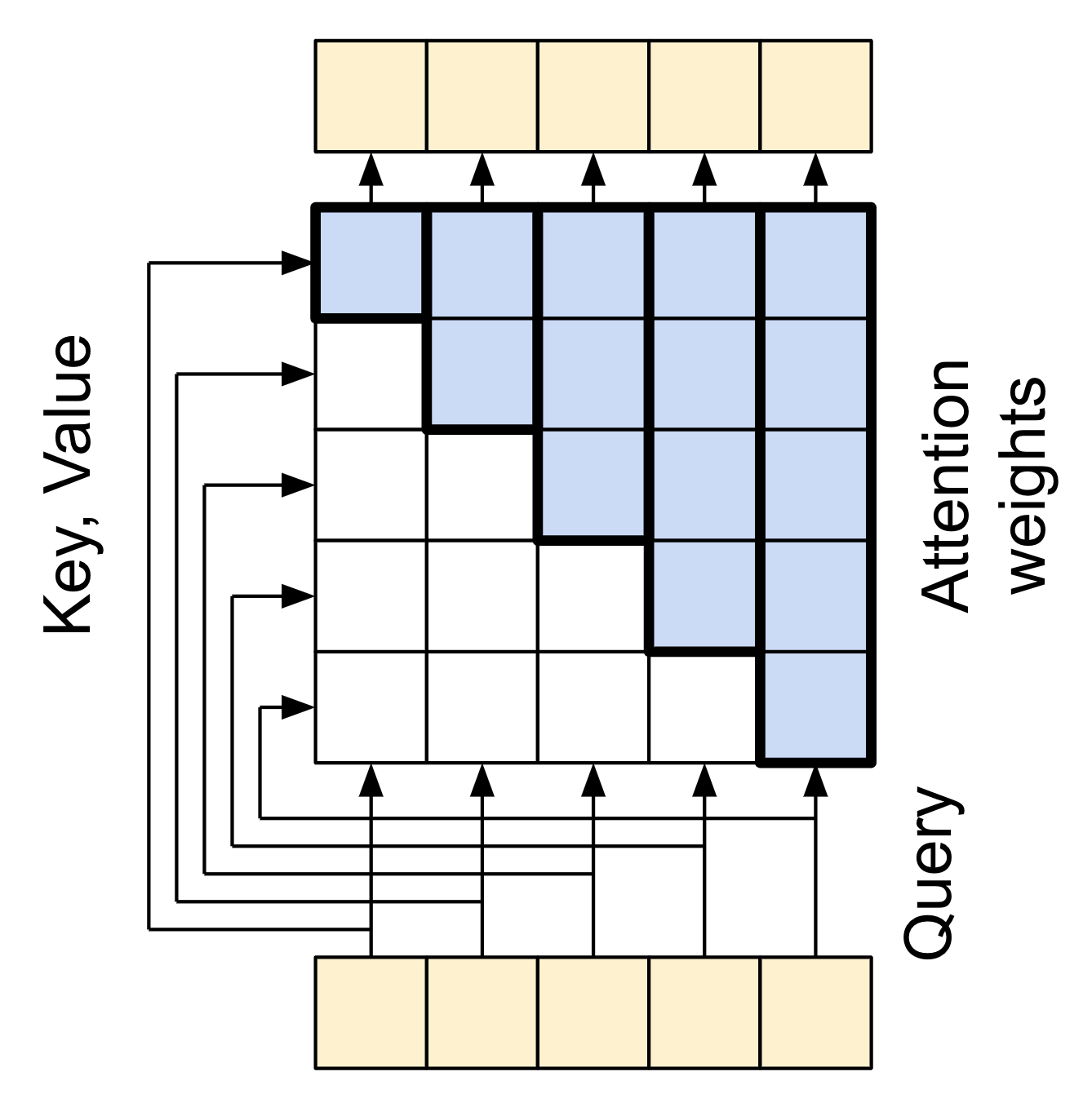

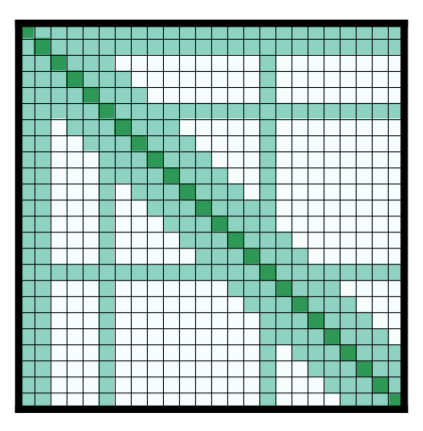

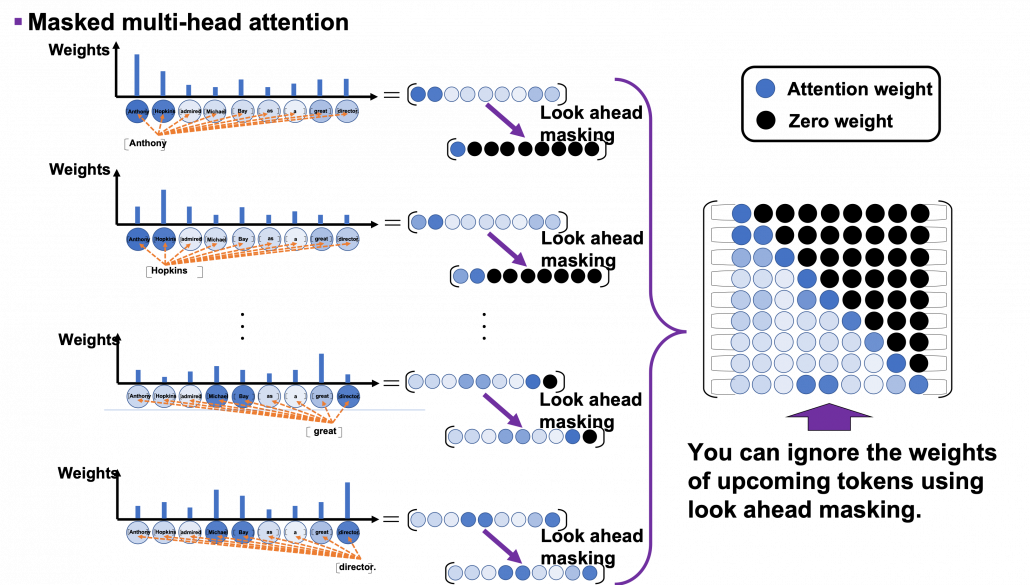

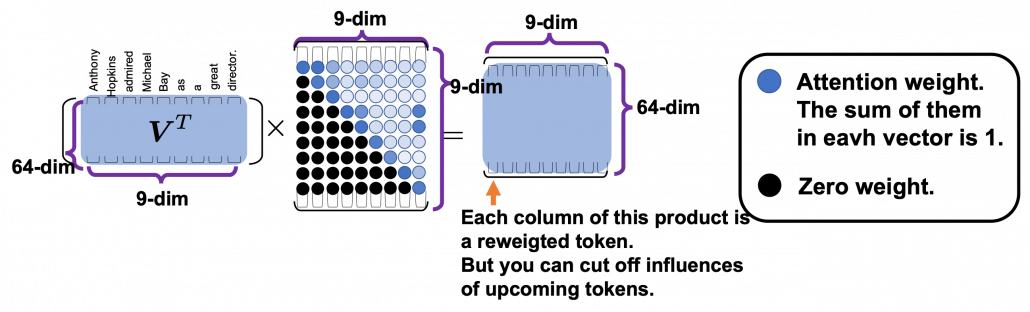

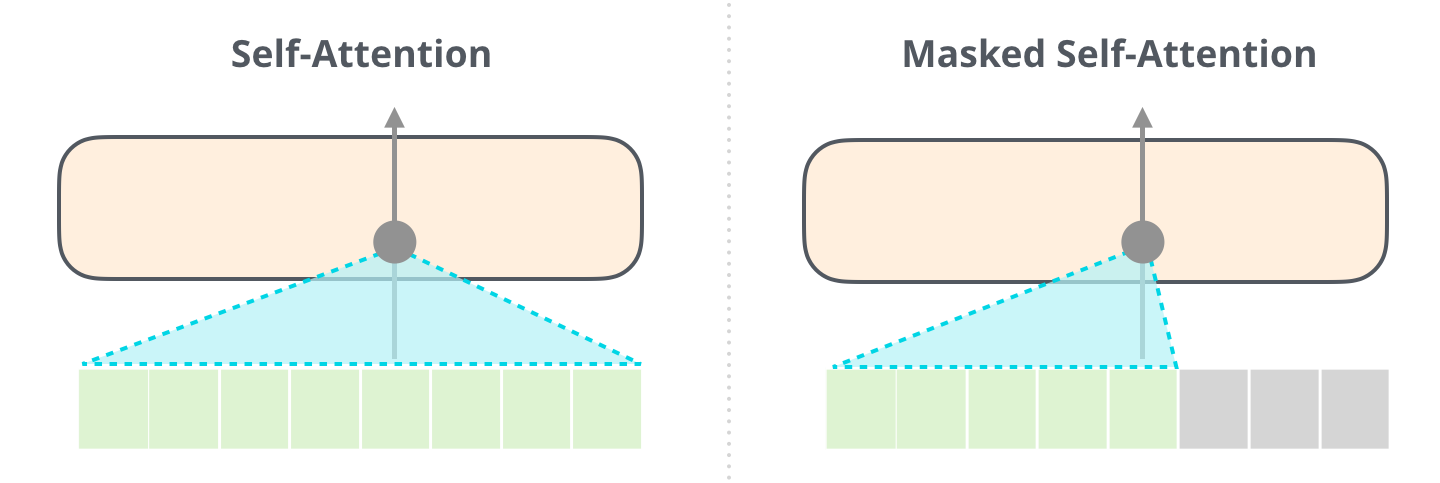

Masking in Transformers' self-attention mechanism | by Samuel Kierszbaum, PhD | Analytics Vidhya | Medium

Generation of the Extended Attention Mask, by multiplying a classic... | Download Scientific Diagram

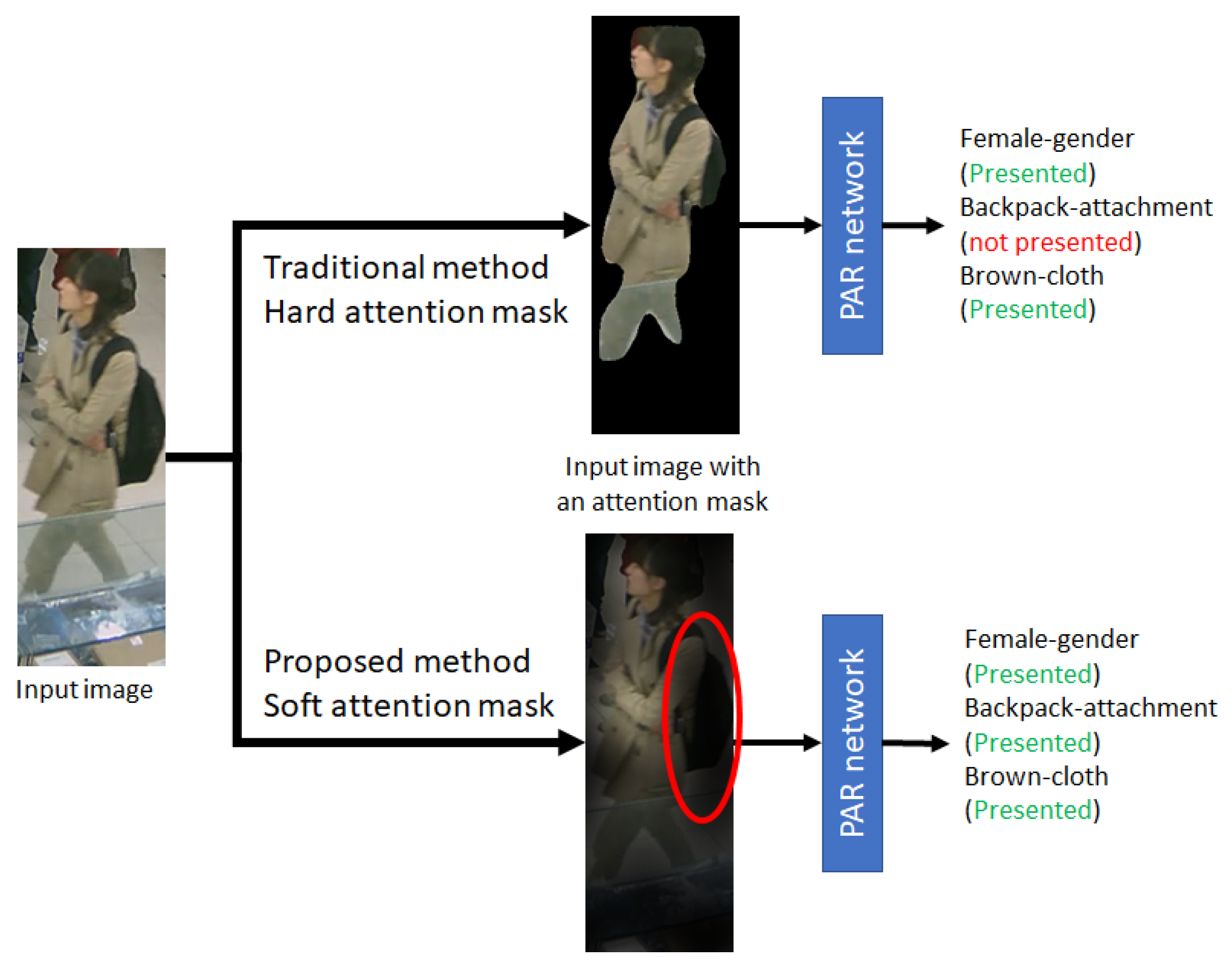

Two different types of attention mask generator. (a) Soft attention... | Download Scientific Diagram

J. Imaging | Free Full-Text | Skeleton-Based Attention Mask for Pedestrian Attribute Recognition Network

Four types of self-attention masks and the quadrant for the difference... | Download Scientific Diagram

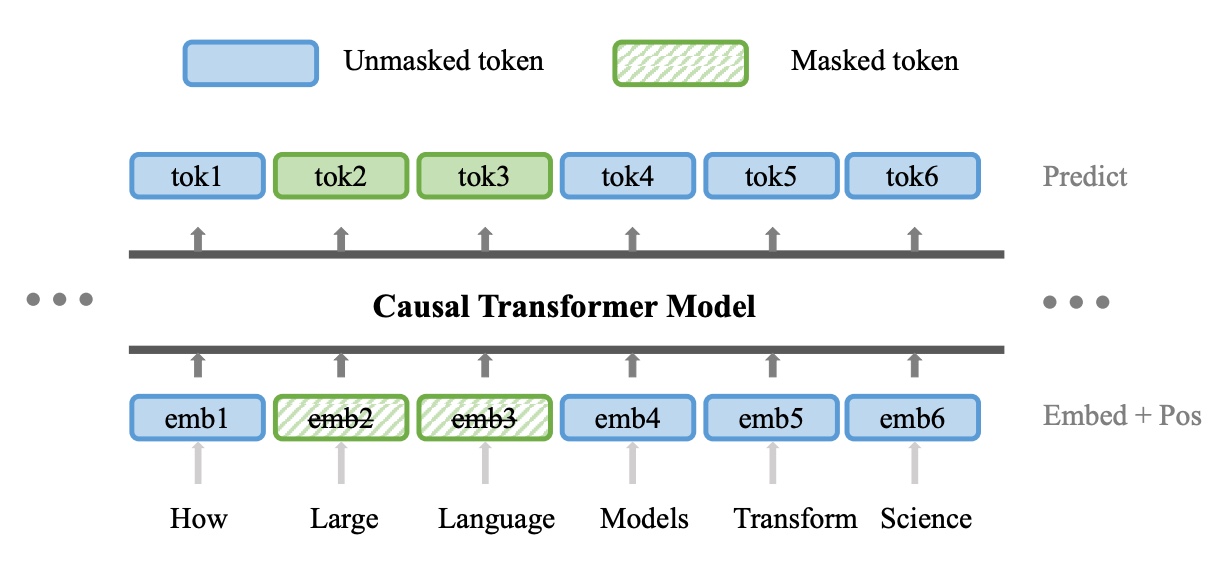

Hao Liu on Twitter: "Our method, Forgetful Causal Masking(FCM), combines masked language modeling (MLM) and causal language modeling (CLM) by masking out randomly selected past tokens layer-wisely using attention mask. https://t.co/D4SzNRzW06"

![\[PDF\] Masked-attention Mask Transformer for Universal Image Segmentation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/658a017302d29e4acf4ca789cb5d9f27983717ff/3-Figure2-1.png)